Overview

Archiving SPC-focus products. There are two major categories (global and regional) with two subdivisions (topography and backplanes -- aka other data). The regional products are derived from bigmaps.

Tools

These tools are stored in GitHub as archivingTools.

convertLatLon - Reads an X,Y,Z (or X,Y,Z,V) list and converts it into lat, lon, distance or lat, lon, value

backplanesGMT - Reads a bigmap and generates a list of latlon, radius, albedo, slope. Use paste latlon radius > tmp for GMT.

backplanesISIS - Reads a bigmap and generates 2D matrix for radius, albedo, slope, lat, lon

phasei - Reads a bigmap and image (the SUMFILE) and generates 2D matrix for incidence, emission, phase angles

- coverage_p_low -- Reads all images and maplets. Creates a pgm that has every pixel marked for every image that it has, counter is only 1.

map_coverage_p_low - Counts the number of images that cover each bigmap pixel. Creates MAPNM-cov.txt and MAPNM-cov.pgm

bustGrid - Takes an ascii grid output and puts each entry on its own line. Needed to take grid and paste it with lat/lon to make grid

globalStat - Renamed version of coverage_p_low that does more (JRW not sure 29 Nov 2021)? Output test version of coverage and max res of images, as well as png of coverage

Grid to GeoTIF

var=radius.ll new=big3 argR="-R280/287/-9/-3" argR="-R0/360/-90/90" argI="-I0.04" date echo "Blockmean" gmt blockmean $var $argR $argI > out echo "sphereinterpolate" cat out | gmt sphinterpolate $argR $argI -Gtest.nc echo "gdal translate" gdal_translate -of GTiff -b 1 -a_srs support/60300.prj NETCDF:test.nc $new.tif

Note: gdal_translate doesn't define everything that is needed because the test.nc projection is not defined. Use the following instead. Below is an example for the Moon (Luna), where several important objectives are accomplished.

The generated GeoTIFF is ready for ingestion into a PDS archive because it will use BandSequential format, and it will generate an example xml label which will have many hard-to-determine details like the header byte offset and the data type (i.e. LSB vs MSB).

- Trent Hare at USGS (personal communication on 02 Mar 2022) says in the planetary community usually uses square pixels, even though GeoTIFFs can handle rectangular pixels. Note that with the projections definitions (point 3.) the units of -tr will be meters. So -tr 1 1 will be 1 meter square vertices.

- gdalwarp defines the projection of the source file (i.e. -s_srs) and output/target file (i.e. -t_srs). I sent Trent Hare the first 10 lines of the input file to GMT and the tmp.nc and on 09 Mar 2022 he determined the source projection is a Cartographic system in degrees, so we can use 30100.prj. The example for 30110.prj is an Equidistant Cylindrical projection.

gdalwarp -of PDS4 -co IMAGE_FORMAT=GEOTIFF -r bilinear -tr $metPx $metPx -s_srs support/30100.prj -t_srs support/30110.prj NETCDF:tmp.nc ${bigmap}-${type}-v${ver}.xml

Also note that you can check the details of a GeoTIFF with gdalinfo and gdalsrsinfo.

Global

Global ICQ resolution to bin sizes [degrees]

ICQ Q Value |

Vertices |

Bin Size [deg] |

512 |

1,579,014 |

0.041 |

256 |

396,294 |

0.164 |

128 |

99,846 |

0.649 |

64 |

25,350 |

2.556 |

32 |

6,534 |

9.917 |

Topography

/bin/cp -f SHAPE.TXT tmp vi tmp 1,%s/D/E/g # Remove first line of shape if it has the Q size, replace D with E ZZ convertLatLon tmp > radius.ll # If you are not using the "1" option, "tmp" needs to be 3 columns, instead of 4.

Commands developed while talking with Trent Hare 22 Aug 2022.

GMT pads with half a pixel, so for a product with 1 deg bins, need to trim 0.5 deg. 0.3 deg bins would trim 0.15 deg. Example below is for a product for Phoebe with 1 deg bins where the output from covertLatLon is radius128.ll

var=radius128.ll argR=-R0.5/359.5/-89.5/89.5 # trim per above; gives a warning when there are more than two decimal places, but the end product is still good. argI=-I1.0 # 1 deg bins gmt blockmean $var $argR $argI > out # This step may not be needed, but keep for now cat out | gmt sphinterpolate $argR $argI -GtestRadius128Trim.nc

Make a cube with Long from 0 to 360. Phoebe is 106.5 km in radius. Circumference divided by 2 gives the distance in meters from center to western/eastern end. Circumference divided by 4 gives distance in meters from equator to pole. -a_ullr is upper left pixel in -long lat and lower right pixel in lon -lat. BOUNDING_DEGREES is what is put in the label (remember that GMT pads by half a pixel, so we are not generating data when there is none). May need to contact Trent Hare to get the correct prj file

gdal_translate -of ISIS3 -co TARGET_NAME=Phoebe -co FORCE_360=True -a_srs support/60915FromTrent.prj -a_ullr -334579.61760731 167289.808803656 334579.61760731 -167289.808803656 -co BOUNDING_DEGREES=0,-90,360,90 NETCDF:testRadius128Trim.nc out_testRadius128Trim.cub

The above command should have GDAL write the label correctly, but apparently there is a bug in the code. My version is more recent than Trent's version, so it shouldn't be a version problem. So now we use ISIS to make a correct ISIS label.

First set up the MAP parameters. Note that for 0 to 360 clon=180, this is because ISIS isn't using clon (it's a misnomer), but is really setting x=0. -180 to 180 would use clon=0. Resolution is pixels/deg, so for 0.3 deg bins you would enter 3.3333 here.

maptemplate map=smp180.map projection=simplecylindrical clon=180 targopt=user targetname=Phoebe eqradius=106500 polradius=106500 resopt=ppd resolution=1

The below will overwrite the given file. Note this is mapping a single pixel (sample and line are always 0.5, 0.5 since that refers to the top left corner of the top left pixel, and maps to lat 90 lon 0.)

maplab from=out_testRadius128Trim.cub map=smp180.map sample=0.5 line=0.5 coordinates=latlon lat=90 lon=0

The above gives a working cube, now to turn it into a geotiff. We use gdal_translate again. To make the label a bit cleaner, could use -tr 1858.77565 1858.77565 (which comes from gdalinfo on the cube and truncating the Pixel Size).

gdal_translate -of PDS4 -co IMAGE_FORMAT=geotiff out_testRadius128Trim.cub out.lbl

Follow up with using vi to edit the <cart:pixel_scale_x unit="pixel/deg">0.999999999999978906</cart:pixel_scale_x> value to be 1 so the label looks nicer.

Sigmas

convertLatLon tmp 1 > sigma.ll

- Use sigma.ll as the input to GMT

After you have the cube, change values of "-1" (which represent 1 or 0 maplets) to "9999"

fx f1=phoebe_sigma_tmp.cub to=phoebe_sigma_c.cub equation="(9999-f1)*(f1<0) + f1" // Use GUI for this since command line will fail

Number of Images

- Run globalStat

- Use global-cov.ll as the input to GMT

var=global-cov.ll new=tCov argR="-R0/360/-90/90" argI="-I1"

Best Image Resolution

- Run globalStat

- Use global-res as the input to GMT

var=global-res.ll new=tRes argR="-R0/360/-90/90" argI="-I1"

Best Maplet Resolution

- Use coverage and choose min/max ranges that bracket all the different maplets resolutions. So if you have 8 different resolutions, you'll have 8 different pgm files. Note that while coverage/SPC works in West longitude, when writing out to an image the presentation doesn't matter if it's West or East longitude since they will look the same. West longitude just starts with 0 in the East and increases West, while East Longitude starts with 0 in the West and increases to the East.

- For each pgm file, use USGS ISIS to turn it into a cube where each pixel has the value of the resolution in meters, and the rest of the pixels have a 9999 meters.

- Change pgm to cube

- Turn all DN values of 0 to (9999-resolution), and all non-zero DN to 0. The "==" logic comparison returns 1 if true and 0 if false

- Add maplet resolution to all DN values

std2isis fr=Phoebe0p00-0p13Maplets.pgm to=Phoebe0p00-0p13Maplets.cub fx f1=Phoebe0p00-0p13Maplets.cub to=Phoebe0p00-0p13flip.cub equation=(9999-125)*(f1==0) // Here the maplet resolution is 125 ; command line will error out, need to use GUI fx f1=Phoebe0p00-0p13flip.cub to=Phoebe0p00-0p13res.cub equation=f1+125 ... std2isis fr=Phoebe1p10-2p00Maplets.pgm to=Phoebe1p10-2p00Maplets.cub fx f1=Phoebe1p10-2p00Maplets.cub to=Phoebe1p10-2p00flip.cub equation=(9999-1500)*(f1==0) // Here the maplet resolution is 1500 fx f1=Phoebe1p10-2p00flip.cub to=Phoebe1p10-2p00res.cub equation=f1+1500

- Now take the minimum value from all the different cubes

fx f1=Phoebe0p00-0p13res.cub f2=Phoebe0p13-0p29res.cub to=Phoebe0p00-0p29.cub equation=min(f1,f2) // ; command line will error out, need to use GUI ... fx f1=Phoebe0p00-1p10.cub f2=Phoebe1p10-2p00res.cub to=Phoebe0p00-2p00.cub equation=min(f1,f2)

Slope

Albedo

/bin/cp -f SHAPEA.TXT tmp vi tmp 1,$s/D/E/g ZZ convertLatLon tmp 1 > albedo.ll

Regional

Run backplanesGMT (which is in ORExSPCsupport) (or backplanesISIS)

Topography

- Run backplanesGMT

# Make the backplanes name=FIRED1 ~/bin/backplanesGMT FIRED1 // give bigmap name 1737.4 // give datum, 1737.4 is for Luna

Output:

Version: 1.50000000

Input map name (only 6 char no MAPFILES and .MAP)

BIGMAP position

V 120.38036346435547 -510.48150634765625 -54.750602722167969

Ux -2.9186813160777092E-002 0.12163921445608139 -0.99214518070220947

Uy 0.97239929437637329 0.23332308232784271 0.0000000000000000

Uz 0.23149037361145020 -0.96476125717163086 -0.12509183585643768

QSZ 99

Lon 279.612366 286.917664

Lat -9.55961323 -2.35706925

<Deprecated> We are currently not using blockmean and sphinterpolate, but this section is maintained as another set of procedures.

We'll need the Lon and Lat range for "argR", and QSZ will give an idea of what you need for "argI".

# Convert them into lon/lat/val paste $name-lonlat.txt $name-r.txt > tmp.ll var=tmp.ll argR="-R279.612366/286.917664/-9.55961323/-2.35706925" argI="-I199+n" # Run the rebinning/interpolating routines echo "Blockmean" gmt blockmean $var $argR $argI > out echo "sphereinterpolate" cat out | gmt sphinterpolate $argR $argI -Gtest.nc

Now you need some math to convert the Lon range number of nodes (i.e. "argI") to deg/px. (286.917664 - 279.612366) / 199 = 0.03671

# Convert to geoTiff echo "gdal translate" degPx=0.0367 gdal_translate -of ISIS3 -tr $degPx $degPx -r bilinear -b 1 -co TARGET_NAME=Tethys -co DATA_LOCATION=GEOTIFF -a_srs support/60300.prj NETCDF:test.nc $name-r-ISIS.lbl

If you don't want to make it readable by ISIS, and don't care about square pixels, you can run the following

gdal_translate -of GTiff -b 1 -a_srs support/60300.prj NETCDF:test.nc $name-r.tif

See above in "Grid to GeoTIF" for details, but a better program to use is gdalwarp so you can properly define the projection. Plus this allows the pixel size to be defined in meters, which is cleaner. The example below if for the Moon (Luna)

gdalwarp -of PDS4 -co IMAGE_FORMAT=GEOTIFF -r bilinear -tr $metPx $metPx -s_srs support/30100.prj -t_srs support/30110.prj NETCDF:tmp.nc ${bigmap}-${type}-v${ver}.xml

How to trim size

Use ArcMap to find the new lat and lon ranges

- Remake geotiff with new lat and lon ranges

- Measure the size of the lat range. Divide this number by the GSD from SPC to get number of pixels.

- Divide number of pixels by lon range to get degPx

- Update config.txt with these new values (i.e. lat and lon ranges, number of pixels across, degPx)

- Make geotiff again

</Deprecated> End section on blockmean and sphinterpolate

Current procedures for generating Geotiff

JRW has a shell script that will make these products, and their PDS label. It is found in /usr/local/spc/bin/makeGeoTiffBigNneighbor.sh. The shell script isn't needed, but can make the process easier.

Make a config.txt file for the shell script that looks something like this...

<rand> INGV02$ cat config.txt BIGMAP=INGV02 ARGR=-R161.625107/161.997177/-35.9918938/-35.6902657 ARGI=-I1821+n METPX=5 PROJECT=30110 BODY=Moon RUNALL=No VERSION=1

METPX is the GSD in meters for the bigmap. Project 30110 is for Equidistant_Cylindrical on the Moon. Project 30118 is North_Pole_Stereographic if you ever make a North Polar region.

# Make input for GMT bigMapName=BSTDTM paste $bigMapName-lonlat.txt $bigMapName-r.txt > tmp.ll var=tmp.ll

gmt nearneighbor $var -Gtmp.nc $argI $argR -S0.001d -N16+m8

# Make normal GeoTiff

echo "gdal warp"

gdalwarp -of PDS4 -dstnodata -3.40282306073709653e+38 -co IMAGE_FORMAT=GEOTIFF -r bilinear -tr $metPx $metPx -s_srs support/30100.prj -t_srs support/$proj.prj NETCDF:tmp.nc ${bigmap}-${type}-v${ver}.xml

#Make GeoTiff readable by ISIS

gdalwarp -of ISIS3 -dstnodata -3.40282306073709653e+38 -tr $metPx $metPx -r bilinear -co TARGET_NAME=$body -co DATA_LOCATION=GEOTIFF -s_srs support/30100.prj -t_srs support/$proj.prj NETCDF:tmp.nc ${bigmap}-${type}-ISIS-v${ver}.lblgmt nearneighbor only writes out a value if data points exist in the specific sectors, then makes an average of the nearest values in those sectors. -S.001d is the search radius of each sector (in the example it is .001 degrees) and -N16+m8 means there are 16 sectors and there must be values in 8 of those sectors to write out a value.

Project 30100 is the data in degrees. Note that if you've previous run ISIS by using "conda activate isis" in the window you are using, then gdalwarp will throw a project error. This is true even if you've run "conda deactivate", so best to just start with a fresh window.

The "-dstnodata" option in gdalwarp is to use a small number for "no data" instead of NaN. NaN is not compatible with PDS4.

prj files can be found here

IMPORTANT: After you've make the geoTiff and it's label, the label is still not ready to go because there will be placeholder values. To fix those, use a script Trent Hare sent JRW on 27 June 2022, which works for LRO data. PDS4_replaceStrings.py into the folder (note it will change ALL xml files in the directory and ALL subdirectories) and run it with "python PDS4_replaceStrings.py". The python file for LRO products can be found here

How to create bigmap products and labels for ISIS cubes

Make the cubes using backplanesISIS and ascii2isis. Now make the cubes Band sequential, which is something ascii2isis v4.1.1 cannot do, despite the ISIS manual saying it can. Instead use cubeatt. Also need to write the files out with a specific filename structure. This filename structure will be used by the shell scripts to make csv files readable by OLAF.

This will be done for the topography, latitude, longitude, and photocube products. I give an example of the conversion for the photocubes below.

bigmap=KAR543

dir=/Users/JW/Dropbox/PDS_LDAP16/products/isis/Karpinskiy/orig/$bigmap

while read img ; do

cubeatt from=img-${img}/IoverF-${bigmap}.cub to=${dir}/${bigmap}_${img}_IoverF.cub+BandSequential

cubeatt from=img-${img}/rawP-$bigmap.cub to=${dir}/${bigmap}_${img}_a.cub+BandSequential

cubeatt from=img-${img}/$bigmap-localI.cub to=${dir}/${bigmap}_${img}_i.cub+BandSequential

cubeatt from=img-${img}/$bigmap-localE.cub to=${dir}/${bigmap}_${img}_e.cub+BandSequential

done < lastFourList_$bigmapNext is to make the label. We use a shell script to generate a csv file, and then upload the cube and csv file into OLAF. To use the shell script, create a config.txt that looks similar to this...

<rand> KAR543$ cat config.txt OBJECT=Moon BIGMAP=KAR543 AUTHLIST="Weirich, J.R." OBSSYSBOOK="LROC" TARGETNAME="Moon" TARGETTYPE=Satellite STARTTIME="N/A" STOPTIME="N/A" REFKEY="N/A" VER=v1 SAMPLES=1015 LINES=1015 VERTPXSCALE=5 HOZPXSCALE=5 WEST=167.284134 EAST=167.880630 SOUTH=73.4460144 NORTH=73.6149368 SPHNAME=Moon_2000 AAXIS=1737400 BAXIS=1737400 CAXIS=1737400 LOCALDESC="This file is simple in a local reference frame and thus not in a map projection. Within cart:local_georeference_information we provide the needed vectors such that a transformation could be applied to this file." GEOINFO=""Vector" is the radius vector that extends from the center of the object to the middle vertex of the DTM. It is in Cartesian space with the first number being in the direction of x, the second number the direction of y, and the third number the direction of z. 0 West Longitude defines the positive x-axis, while north pole is the positive z-axis. 270 West Longitude defines the positive y-axis. The plane of the DTM is determined by the local slope. This plane of the DTM is defined by two horizontal unit vectors (i.e. Ux and Uy) and a vertical unit vector (i.e. Uz). Each of these unit vectors are defined in the same Cartesian space as the radius vector, where again the first number is in the direction of x, the second number the direction of y, and the third number the direction of z. # Vector -480.19241333007812 105.74529266357422 1663.2192382812500 # Ux -0.94595688581466675 0.21812622249126434 -0.23997193574905396 # Uy -0.22469174861907959 -0.97442990541458130 0.0000000000000000 # Uz -0.23383583128452301 5.3919713944196701E-002 0.97077983617782593 "

Vector and Ux, Uy, and Uz come from dumpMapHeaders.

Next is the run shell scripts. For the topography, lat, and lon cubes, you'll use productInfoOLAF-isis-unprojected-topocubes.sh with a command variable giving the directory location of config.txt. For the photometric cubes use productInfoOLAF-isis-unprojected-photocubes.sh with command variables of the directory location and a file with the name of all the images.

Once you have the cube in PDS format and a csv file, upload these to OLAF. Use the "2-D Array Images" Product, and upload everything.

Slope

* See topography

Albedo

* See topography

Sigmas

- Run backplanesGMT (this gets lon/lat only file)

- Take the SIGMAS.pgm and convert it to lon/lat/val files

name=LEADEQ std2isis from=SIGMAS.pgm to=tmp.cub isis2ascii from=tmp.cub to=tmp1.txt sed '1,2d' tmp1.txt > tmp2.txt ~/bin/bustGrid tmp2.txt > $name-sig.txt paste $name-lonlat.txt $name-sig.txt > sigma.ll var=sigma.ll

- Continue with topography script

Number of Images

- run map_coverage_p_low

- RESLIM should be 3x best maplet GSD

- Be sure to exclude limb images using coverage_p.in

- Convert coverage out to lon/lat/value

name=LEADEQ bustGrid $name-cov.txt > tmp1.txt paste $name-lonlat.txt tmp1.txt > imgNum.ll var=imgNum.ll

* Continue with topography script

Best Image Resolution

- run map_coverage_p_low

- RESLIM should be high enough that there are no holes in coverage

- Be sure to exclude limb images using coverage_p.in

- Convert resolution out to lon/lat/value

name=LEADEQ bustGrid $name-res.txt > tmp1.txt paste $name-lonlat.txt tmp1.txt > imgRes.ll var=imgRes.ll

* Continue with topography script

Photometric Data (Also works for I/F)

This includes emission angle, incidence angle and phase angle. It also works for I/F on the output from rawMOSAIC.

Run phasei (located in ORExSPCsupport)

Run process similar to Number of Images (i.e. use "bustGrid" and "paste")

Uploading Products to OLAF

Uploading Global Cubes to OLAF

To start, make sure you've converted the cubes to BandSequential. Use the USGS ISIS program cubeatt (i.e. cube attributes). Search this page for "BandSequential" or "cubeatt" for instructions.

After that you'll need to make the "product_info.csv" for these cubes. You'll use productInfoOLAF-isis-global.sh along with a config.txt file. Note that all the values in this example are for a Q=512 ICQ, while the assessment products (albedo, best map, maxres, numimg, sigma) are all 1 degree bins. Config.txt looks something like this...

OBJECT="Saturn IX (Phoebe)" OUTNAME=Phoebe OUTID=_c AUTHLIST="Weirich, J.R." OBSSYSBOOK="ISIS-WAC; ISIS-NAC" TARGETNAME="Phoebe" TARGETTYPE=Satellite STARTTIME="N/A" STOPTIME="N/A" REFKEY="N/A" VER=v1 SAMPLES=2222 # This will stay the same for all Q=512 models LINES=1111 # This will stay the same for all Q=512 models VERTPXSCALE=300 # This will be different for each body, to calculate use SQRT(4*Pi*Radius^2/1579014) where Radius is in meters. HOZPXSCALE=300 # Same as for VERTPXSCALE

Choose "2D Array Image", and then follow the instructions.

Uploading Regional Cubes to OLAF

Similar to uploading global cubes, but the label creation follows a slightly different path. For regional photocubes the "product_info.csv" is generated using a shell script with a name something like productInfoOLAF-isis-unprojected-photocubes.sh along with a config.txt file. Same thing for the topocubes, but since the labels are different we use productInfoOLAF-isis-unprojected-topocubes.sh. For both shell scripts, config.txt looks something like this...

OBJECT=Mercury BIGMAP=NF2ED1 OUTNAME=nafn358706425 OUTID=_c AUTHLIST="Weirich, J.R.; Palmer, E.E." OBSSYSBOOK="MDIS-WAC; MDIS-NAC" TARGETNAME="Mercury" TARGETTYPE=Planet STARTTIME="N/A" STOPTIME="N/A" REFKEY="N/A" VER=v1 SAMPLES=1335 LINES=1335 VERTPXSCALE=60 HOZPXSCALE=60 WEST=63.07554 EAST=65.42407 SOUTH=34.92059 NORTH=36.80719 SPHNAME=Mercury AAXIS=2439700 BAXIS=2439700 CAXIS=2439700 LOCALDESC="This file is simple in a local reference frame and thus not in a map projection. Within cart:local_georeference_information we provide the needed vectors such that a transformation could be applied to this file." GEOINFO=""Vector" is the radius vector that extends from the center of the object to the middle vertex of the DTM. It is in Cartesian space with the first number being in the direction of x, the second number the direction of y, and the third number the direction of z. 0 West Longitude defines the positive x-axis, while north pole is the positive z-axis. 270 West Longitude defines the positive y-axis. The plane of the DTM is determined by the local slope. This plane of the DTM is defined by two horizontal unit vectors (i.e. Ux and Uy) and a vertical unit vector (i.e. Uz). Each of these unit vectors are defined in the same Cartesian space as the radius vector, where again the first number is in the direction of x, the second number the direction of y, and the third number the direction of z. # Vector 857.78729248046875 1778.3778076171875 1427.5522460937500 # Ux 0.24726431071758270 0.51332724094390869 -0.82180017232894897 # Uy -0.90092843770980835 0.43396770954132080 0.0000000000000000 # Uz 0.35663473606109619 0.74038314819335938 0.56977581977844238 "

Choose "2D Array Image", and then follow the instructions.

Uploading geoTiffs to OLAF

Add LIDs using "External Products" under "Add Data Products". Add a LID such as "urn:nasa:pds:lunar_lro_lroc_topography_domingue_2022:data:ings358416181_topo_noslope_masked" and then upload the the tif and xml files. I think you will still need to add the files to the bundle after Jesse sends you the zip/folder. You should also add the instrument info to the label since GDAL won't add that. This instrument info will have to be added to OLAF manually by the PDS, but if you are using OLAF they will have done this for the bundle, so you will be able to copy if from other labels generated by OLAF.

<Investigation_Area> // This should already be added by the current procedures, which utilize a python script.

<name>MESSENGER</name> // This should already be added by the current procedures, which utilize a python script.

<type>Mission</type> // This should already be added by the current procedures, which utilize a python script.

<Internal_Reference> // This should already be added by the current procedures, which utilize a python script.

<lid_reference>urn:nasa:pds:context:investigation:mission.messenger</lid_reference> // This should already be added by the current procedures, which utilize a python script.

<reference_type>data_to_investigation</reference_type> // This should already be added by the current procedures, which utilize a python script.

</Internal_Reference> // This should already be added by the current procedures, which utilize a python script.

</Investigation_Area> // This should already be added by the current procedures, which utilize a python script.

<Observing_System>

<Observing_System_Component>

<name>MESSENGER</name>

<type>Host</type>

<Internal_Reference>

<lid_reference>urn:nasa:pds:context:instrument_host:spacecraft.mess</lid_reference>

<reference_type>is_instrument_host</reference_type>

</Internal_Reference>

</Observing_System_Component>

<Observing_System_Component>

<name>MERCURY DUAL IMAGING SYSTEM NARROW ANGLE CAMERA</name>

<type>Instrument</type>

<Internal_Reference>

<lid_reference>urn:nasa:pds:context:instrument:mdis-nac.mess</lid_reference>

<reference_type>is_instrument</reference_type>

</Internal_Reference>

</Observing_System_Component>

</Observing_System>

<Observing_System>

<Observing_System_Component>

<name>MESSENGER</name>

<type>Host</type>

<Internal_Reference>

<lid_reference>urn:nasa:pds:context:instrument_host:spacecraft.mess</lid_reference>

<reference_type>is_instrument_host</reference_type>

</Internal_Reference>

</Observing_System_Component>

<Observing_System_Component>

<name>MERCURY DUAL IMAGING SYSTEM WIDE ANGLE CAMERA</name>

<type>Instrument</type>

<Internal_Reference>

<lid_reference>urn:nasa:pds:context:instrument:mdis-wac.mess</lid_reference>

<reference_type>is_instrument</reference_type>

</Internal_Reference>

</Observing_System_Component>

</Observing_System>

<Target_Identification> // This should already be added by the current procedures, which utilize a python script.

<name>Mercury</name> // This should already be added by the current procedures, which utilize a python script.

<type>Planet</type> // This should already be added by the current procedures, which utilize a python script.

<Internal_Reference> // This should already be added by the current procedures, which utilize a python script.

Running GMT on the PSI Hawk Cluster

To begin making projected geoTiffs for ArcGIS and ISIS, start with the txt files generated when making the photometric cubes. The scripts used produce the following txt files:

IoverF-<bigmap>-<img>.txt (Ex. IoverF-LEADHL-N1563651588.txt)

- LEADHL-a.TXT

- LEADHL-e.TXT

- LEADHL-i.TXT

The above files need to be uploaded to the Hawk cluster, while keeping their parent directory structure.

Go to the photocube directory for each bigmap

rsync -hapvP --exclude=*.cub --exclude=*.prt N1* [email protected]:/home/jweirich/Tethys/geoTiffTethys/LEADHL/

Also copy the output from backplanesGMT and config.txt

Ex. config.txt

BIGMAP=LEADHL ARGR=-R226.209259/261.399750/3.66536975/33.9945526 ARGI=-I801+n DEGPX=0.0378641483770287 PROJECT=60300 BODY=Tethys RUNALL=No VERSION=1

The basic command being run is similar to the Topography step above:

echo "Blockmean"

gmt blockmean $var $argR $argI > ${product}out

echo "sphereinterpolate"

cat ${product}out | gmt sphinterpolate $argR $argI -G${product}.nc... where $product is going to be IoverF, a, e, or i. Everything else is just a wrapper to deconflict all the files since we're running in parallel.

I have the GMT commands above in a script called makeaGeoBig.sh which reads most of the inputs from config.txt. makeGeoBig.sh is called by makeIoverFGeoBig.sh, makePhaseGeoBig.sh, makeEmissGeoBig.sh, and makeIncGeoBig.sh

The key lines in these scripts are: (Ex. below is for Incidence)

echo "getting ready for GMT ..." ~/bin/bustGrid $bigmap-i.TXT > bustedi-$bigmap-$img.txt paste ../$bigmap-lonlat.txt bustedi-$bigmap-$img.txt > $var echo "calling makeGeoTiffBig.sh" sh ~/bin/makeGeoTiffBig.sh $var $product

$product is as before, while $var is IoverF.ll, phase.ll, emiss.ll, or inc.ll

The above four scripts are called by run4.sh, which is essentially a copy/paste script with different images. Note the use of & and "wait". & is to get multiple processors running on each machine, and "wait" is to keep the script running until all four processes complete.

The key lines from run4.sh look like this:

cd $img sh ~/bin/makeIoverFGeoBig.sh $img & sh ~/bin/makePhaseGeoBig.sh $img & sh ~/bin/makeEmissGeoBig.sh $img & sh ~/bin/makeIncGeoBig.sh $img & cd .. wait

Since there are 8 processors per Hawk node, we want to call 2 images per node. To do this we have a script that uses sbatch commands. Here's what one of those looks like:

#\!/bin/bash # #SBATCH -J Call1.sh # Job name #SBATCH -t 2-0:00 # time (D-HH:MM) #SBATCH --partition=cpu #SBATCH -o slurm.%N.%j.out # STDOUT #SBATCH -e slurm.%N.%j.err # STDERR sh ~/bin/run4.sh N1563651588 & sh ~/bin/run4.sh N1563651648 & wait

Rather than type each by hand, I instead have a script (makeScript.sh) to make a bunch of these:

# 29 Sep 2021 - John R. Weirich

# Script to call different photo scripts

imgList=$1

if [ -z $imgList ]; then

echo "Please select a list of images"

echo "Usage: <program> <Image List File>"

exit

fi

list=`cat $imgList`

top=1

count=0

rm -f Call*.sh

for i in $list

do

if [ $top = "1" ]

then

let count=$count+1

out="Call$count.sh"

echo "#!/bin/bash" > $out

echo "#" >> $out

echo "#SBATCH -J $out # Job name" >> $out

echo "#SBATCH -t 2-0:00 # time (D-HH:MM)" >> $out

echo "#SBATCH --partition=cpu" >> $out

echo "#SBATCH -o slurm.%N.%j.out # STDOUT" >> $out

echo "#SBATCH -e slurm.%N.%j.err # STDERR" >> $out

echo " " >> $out

echo "sh ~/bin/run4.sh $i &" >> $out

top=2

else

echo "sh ~/bin/run4.sh $i &" >> $out

echo " " >> $out

echo "wait" >> $out

top=1

fi

done

if [ $top = "2" ]

then

echo " " >> $out

echo "wait" >> $out

fiTo fire everything off, you'll make a copy/paste that will look like this:

sbatch Call1.sh sbatch Call2.sh etc.

Once all those processes are finished, you'll have converted each *.txt file into a *.nc file. Now pull that back down to your local machine to run through GDAL.

rsync -hapvP --prune-empty-dirs --include="*/" --include="*.nc" --exclude="*" [email protected]:/home/jweirich/Tethys/geoTiffTethys/LEADHL/ .

Once downloaded, run

sh ../bin/wrapperAfterClusterImage.sh imgListLEADHL

wrapperAfterClusterImages.sh is this:

# 30 Sep 2021 - John R. Weirich

# Wrapper to turn all the *.nc into geoTIFFS

imgList=$1

if [ -z $imgList ]; then

echo "Please select a list of images"

echo "Usage: <program> <Image List File>"

exit

fi

list=`cat $imgList`

for i in $list

do

cd $i

sh /usr/local/spc/bin/afterClusterImage.sh IoverF $i

sh /usr/local/spc/bin/afterClusterImage.sh a $i

sh /usr/local/spc/bin/afterClusterImage.sh e $i

sh /usr/local/spc/bin/afterClusterImage.sh i $i

cd ..

doneAnd afterClusterImage.sh is this:

# 28 Sep 2021 - John R. Weirich

# Make the various geoTiffs using GDAL

# Run from geoTiff[directory]/

# 30 Sep 2021: Began modifying, then undid changes (hopefully they are undone correctly!)

# Usage in geoTiff directory : sh /usr/local/spc/bin/afterClusterBigmap.sh <product/type name> <image>

type=$1

img=$2

if [ -z $type ]; then

echo "Please select the type"

echo "Usage: <program> <type> <Image>"

exit

fi

if [ -z $img ]; then

echo "Please select the image"

echo "Usage: <program> <type> <Image>"

exit

fi

if [ ! -e ../config.txt ]

then

echo "Make config.txt!"

exit

fi

source ../config.txt

if [ ! -e ./${type}.nc ]

then

echo "Make ${type}.nc!"

exit

fi

bigmap=$BIGMAP

argR=$ARGR

argI=$ARGI

degPx=$DEGPX

proj=$PROJECT

body=$BODY

runAll=$RUNALL

ver=$VERSION

echo "$bigmap"

echo "$argR"

echo "$argI"

echo "$degPx"

echo "$proj"

echo "$body"

echo "$runAll"

# Make normal GeoTiff

echo "gdal translate"

gdal_translate -of GTiff -b 1 -a_srs ../support/$proj.prj NETCDF:${type}.nc ${bigmap}-${img}-${type}-v${ver}.tif

#Make GeoTiff readable by ISIS

gdal_translate -of ISIS3 -tr $degPx $degPx -r bilinear -b 1 -co TARGET_NAME=$body -co DATA_LOCATION=GEOTIFF -a_srs ../support/$proj.prj NETCDF:${type}.nc ${bigmap}-${img}-${type}-ISIS-v${ver}.lblNote: See "Grid to GeoTIF" for a better program than gdal_translate, since gdal_translate doesn't always write the projection.

Make Pretties

gmt begin GMT_cont gmt set GMT_THEME cookbook gmt grdcontour test.nc gmt end show #gmt grdcontour test.nc -C10 -A50 gmt begin GMT_img gmt set GMT_THEME cookbook #gmt makecpt -Crainbow gmt grdimage test.nc -JM6i -B -BWSnE gmt colorbar -DJTC -Bxa -By+lm gmt end show gmt begin GMT_img gmt makecpt -Crainbow gmt set GMT_THEME cookbook gmt grdimage test.nc $argR -JM6i -B -BWSnE #gmt colorbar -DJTC -I0.4 -Bxa -By+lm gmt colorbar -DJTC -Bxa -By+lm gmt end show

Setup

Use Dropbox spcShare directory

cd /opt/local/spc/ relink ~/Dropbox/spcShare/Tethys .

Then in the working directory

relink.sh /opt/local/spc/Tethys/support . relink.sh /opt/local/spc/Tethys/mapConfig .

How to make DSK from ICQ and MAP

MAP to DSK

Pathway to make a DSK. Convert MAP to OBJ using AltWG tools, then convert OBJ to DSK using SPICE tools.

Example using TRALHL.MAP, to get obj (note: make sure you use the --local)

Maplet2FITS TRALHL.MAP tmp.plt FITS2OBJ --local tmp.plt TRALHL.obj

Now turn OBJ into DSK (note: lbl file is listed below)

<rand> makeDSK$ /usr/local/toolkit/mice/exe/mkdsk MKDSK Program; Ver. 2.0.0, 28-FEB-2017; Toolkit Ver. N0066 SETUP FILE NAME> TRALHL-obj.lbl Reading plate model input file... ...Done reading plate model input file. Generating Spatial Index... Segregating and closing DSK file... DSK file was created. All done.

Example of LBL file. Refer to https://naif.jpl.nasa.gov/pub/naif/utilities/MacIntel_OSX_64bit/mkdsk.ug for more details.

<rand> makeDSK$ cat TRALHL-obj.lbl

\begindata

INPUT_SHAPE_FILE = 'TRALHL.obj'

OUTPUT_DSK_FILE = 'TRALHL.bds'

SURFACE_NAME = 'TRALHL Tethys' ### This can be user defined; see NAIF_SURFACE_NAME below

CENTER_NAME = 'TETHYS' ### Must be spice compatible name

REF_FRAME_NAME = 'IAU_TETHYS' ### Must be spice compatible frame

START_TIME = '1950-JAN-1/00:00:00'

STOP_TIME = '2050-JAN-1/00:00:00'

DATA_CLASS = 1 ### Data class 1 is for shapes with a single radii for each lat/lon

INPUT_DATA_UNITS = ( 'ANGLES = DEGREES'

'DISTANCES = KILOMETERS' )

COORDINATE_SYSTEM = 'LATITUDINAL' ### Haven't yet experimented with other systems

DATA_TYPE = 2 ### As of 22 Nov '21, all DATA TYPES are 2

PLATE_TYPE = 3 ### For an OBJ input use a Plate Type of 3

KERNELS_TO_LOAD = ( 'naif0012.tls' ) ### Not sure if this is needed

NAIF_SURFACE_NAME += 'TRALHL Tethys' ### Here is where you define user surface

NAIF_SURFACE_CODE += 1 ### The above SPICE link doesn't explain this code well; 1 seems to work

NAIF_SURFACE_BODY += 603 ### This is the SPICE code for Tethys

MINIMUM_LATITUDE = 11.715 ### I've played around changing the min/max lat/lon and it doesn't seem to matter much

MAXIMUM_LATITUDE = 29.104 ### At least it doesn't seem to change anything once converted back to an OBJ

MINIMUM_LONGITUDE = 102.452

MAXIMUM_LONGITUDE = 122.322

\begintext

ICQ to DSK

Pathway to make a DSK. Convert ICQ to OBJ using AltWG tools, then convert OBJ to DSK using SPICE tools.

Example using Tethys.txt, to get obj

ICQ2PLT Tethys.txt tmp.plt PLT2OBJ tmp.plt Tethys.obj

To get OBJ to DSK, use the same technique above, except the min/max lat/lon should be -90 to 90 and -180 to 180.

DSK to OBJ

-dsk gives the input dsk, -text gives the output file, -format vertex-facet determines the output format to be in an obj

/usr/local/toolkit/mice/exe/dskexp -dsk TRALHL.bds -text TRALHL_fromDSK.obj -format vertex-facet

Note: To read the obj generated by dskexp in Meshlab, first trim the spaces in front of the "v"'s and "f"'s of the output file.

The SPICE commands DSKBRIEF and COMMNT may also be useful to check the dsk generated.

Notes on extracting information from geotiff. i.e. Entries for OLAF

The below was written prior to utilizing gdalwarp to make the geotiffs. See "Grid to GeoTIF" section for details, but if you use gdalwarp to make the geotiff you can also output a sample xml label that has most (all?) of the information below.

To get the pixel size of a geotiff, use gdalinfo. Command and output of a rectangular pixel size are shown below. Number of pixels is shown by the "Size is 501, 100" where 501 is px and 100 is line. Pixel size (in deg) is given by "Pixel Size = (0.036126000000000,-0.181000000000000)". Note you can also get this number by using the "Corner Coordinates:" of (292.297 - 274.198) / 501 = 0.036126, or (3.115 - -14.984) / 100 = 0.181. GDAL outputs a negative sign in front of the 0.181, I am not sure why.

<rand> test$ gdalinfo -stats LEADEQ-radius-v1.tif

Driver: GTiff/GeoTIFF

Files: LEADEQ-radius-v1.tif

LEADEQ-radius-v1.tif.aux.xml

Size is 501, 100

Coordinate System is:

GEOGCRS["Tethys 2000",

DATUM["D_Tethys_2000",

ELLIPSOID["Tethys_2000_IAU_IAG",535600,54.6530612244898,

LENGTHUNIT["metre",1,

ID["EPSG",9001]]]],

PRIMEM["Greenwich",0,

ANGLEUNIT["degree",0.0174532925199433,

ID["EPSG",9122]]],

CS[ellipsoidal,2],

AXIS["latitude",north,

ORDER[1],

ANGLEUNIT["degree",0.0174532925199433,

ID["EPSG",9122]]],

AXIS["longitude",east,

ORDER[2],

ANGLEUNIT["degree",0.0174532925199433,

ID["EPSG",9122]]]]

Data axis to CRS axis mapping: 2,1

Origin = (274.197937000000024,3.115500000000000)

Pixel Size = (0.036126000000000,-0.181000000000000)

Metadata:

AREA_OR_POINT=Area

lat#actual_range={-14.894,3.025}

lat#axis=Y

lat#long_name=latitude

lat#standard_name=latitude

lat#units=degrees_north

lon#actual_range={274.216,292.279}

lon#axis=X

lon#long_name=longitude

lon#standard_name=longitude

lon#units=degrees_east

NC_GLOBAL#Conventions=CF-1.7

NC_GLOBAL#GMT_version=6.2.0 [64-bit]

NC_GLOBAL#history=sphinterpolate -R274.216/292.279/-14.894/3.025 -I501+n/100+n -Gtmp.nc

z#actual_range={525616.3125,530976.5625}

z#long_name=z

z#_FillValue=nan

Image Structure Metadata:

INTERLEAVE=BAND

Corner Coordinates:

Upper Left ( 274.198, 3.115) (274d11'52.57"E, 3d 6'55.80"N)

Lower Left ( 274.198, -14.984) (274d11'52.57"E, 14d59' 4.20"S)

Upper Right ( 292.297, 3.115) (292d17'49.43"E, 3d 6'55.80"N)

Lower Right ( 292.297, -14.984) (292d17'49.43"E, 14d59' 4.20"S)

Center ( 283.248, -5.934) (283d14'51.00"E, 5d56' 4.20"S)

Band 1 Block=501x4 Type=Float32, ColorInterp=Gray

Minimum=525616.312, Maximum=530976.562, Mean=528625.394, StdDev=890.336

NoData Value=nan

Metadata:

actual_range={525616.3125,530976.5625}

long_name=z

NETCDF_VARNAME=z

STATISTICS_MAXIMUM=530976.5625

STATISTICS_MEAN=528625.39353917

STATISTICS_MINIMUM=525616.3125

STATISTICS_STDDEV=890.33610412375

STATISTICS_VALID_PERCENT=100

_FillValue=nan- Bits per Pixel is 32

- Sample Direction is RIGHT

- Line Direction is DOWN

- Axis Order is Last_Index_fastest

- Data Type is IEEE_REAL_LSB (or at least the ones I make with GDAL are LSB)

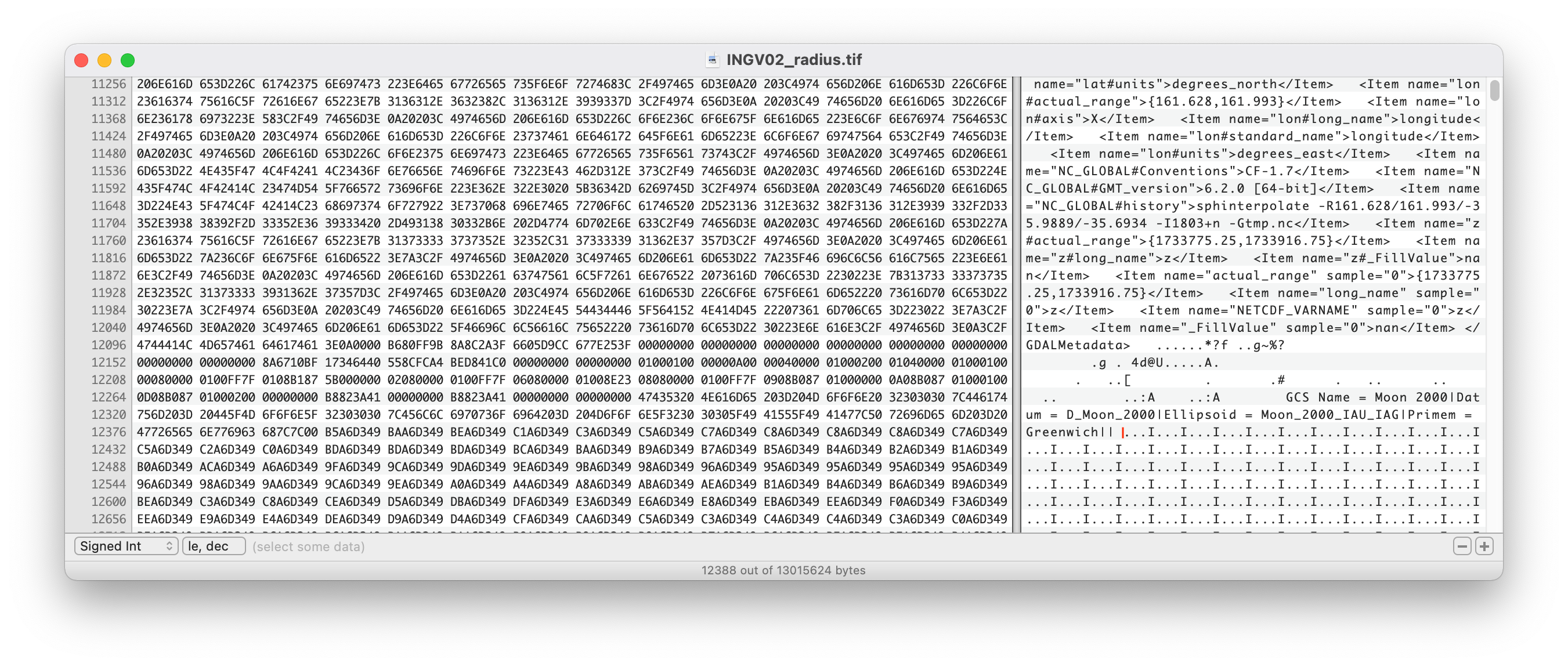

- Header size can be found using Hex Fiend. Notice the red line a bit after the word "Greenwich". That is where you need to click, and then the header size is given at the bottom of the window. Click to see larger image if needed.

- To get Vertical Pixel scale take the second Pixel Size value (0.181000000000000), and radius of the object. V Px Scale (meters) = 2*PI*(Radius in meters)/360*(0.181000000000000)

- To get Horizontal Pixel scale take the first Pixel Size value (0.036126000000000), radius of the object, and Latitude. H Px Scale (meters) = 2*PI(Radius in meters)*cos(Lat in radians)/360*(0.036126000000000)

Notes on extracting information from cube. i.e. Entries for OLAF

Most data can be gleaned from open the cube in the program "vi". Note that to get the cube into BandSequential you may need to run the ISIS program "cubeatt from=<filename1>.cub to=<filename2>.cub+BandSequential"

Object = IsisCube

Object = Core

StartByte = 65537

Format = BandSequential

Group = Dimensions

Samples = 1821

Lines = 1821

Bands = 1

End_Group

Group = Pixels

Type = Real

ByteOrder = Lsb

Base = 0.0

Multiplier = 1.0

End_Group

End_Object

End_Object

Object = Label

Bytes = 65536

End_Object

Object = History

Name = IsisCube

StartByte = 13329701

Bytes = 1069

End_Object

End