TestF3C - Results

Comments

The final CompareOBJ RMS shows no significant difference between subtests, regardless of how poor the viewing conditions are. A visual inspection of the topography, however, clearly shows the degradation and poor representation that occur as imaging conditions become qualitatively "worse". As a proxy for accuracy, we can instead look at statistics based on a normalized cross correlation between the generated topography and the truth topography. Comparing both the target area as a whole and the individual high-resolution maplets to the truth topography demonstrates more clearly the relationship between the quality of observing conditions and the quality of the topography.

It should also be noted that although the cross correlation values have a maximum of 1, comparing two samplings of the truth topography to one another generates a value of 0.833. The reasons why this is a reasonable result are not discussed here; the value is given purely as a benchmark against which to compare the other correlation values.

CompareOBJ RMS

The CompareOBJ RMS does not appear to be strongly affected by the quality of imaging conditions. We have come to realize that RMS on its own is not a sufficient means of evaluating topography for accuracy; it should be used in addition to other criteria.

CompareOBJ RMS:

| Sub-Test | RMS (cm) | Optimal Trans-Rot RMS (cm) | Kabuki RMS (cm) |
|---|---|---|---|
| F3C1 | N/A | N/A | N/A |
| F3C2 | 65.999 | 16.116 | 9.886 |
| F3C3 | 54.171 | 20.513 | 11.075 |
| F3C4 | 63.450 | 21.909 | 10.617 |
| F3C5 | 64.596 | 16.583 | 9.905 |
| F3C6 | 66.583 | 16.845 | 9.253 |

There are a few caveats to the above results that are important to understand. First, we now know that the coordinate system is shifted by approximately 2 m between the truth model and the evaluation model. This does not affect the topography itself, only its location in 3D space. It means that the plain CompareOBJ RMS is fundamentally calculated in the wrong place, and thus the values in the second column of the above table are fairly meaningless. Second, CompareOBJ's translation and rotation optimization algorithm is known not to always find the correct location, which is what is happening here. We have used other tools (primarily MeshLab) to verify that these translations are indeed incorrect. The RMS values in the third column, while closer to the truth than those in the second column, are therefore still incorrect.

The values in the final column are the most meaningful RMS values we have, because we used a hand-calculated initial guess for the necessary translation and then optimized RMS around that location. There is no large deviation in RMS between the subtests, but the values do generally increase with degrading imaging conditions. F3C3 was expected to have the worst conditions and had the worst RMS, while F3C6 had the best imaging conditions and the best RMS.
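The effect of an uncorrected offset on RMS can be illustrated with a small sketch. This is not CompareOBJ, which works on OBJ meshes; as a simplifying assumption the surfaces here are synthetic height grids, the offset is a whole number of pixels, and the helper names (`rms_difference`, `shift_grid`) are hypothetical. The point is only that two identical surfaces give a large RMS when compared in the wrong place and zero once the translation is removed.

```python
import numpy as np

def rms_difference(truth, model):
    """Root-mean-square height difference between two equally sized grids."""
    diff = np.asarray(model, dtype=float) - np.asarray(truth, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))

def shift_grid(grid, dx, dy):
    """Shift a grid by whole pixels; periodic wrap keeps the toy example simple."""
    return np.roll(np.roll(grid, dy, axis=0), dx, axis=1)

# Synthetic terrain (hypothetical data): smooth bumps that tile a 100 x 100 grid.
y, x = np.mgrid[0:100, 0:100]
truth = np.sin(2 * np.pi * x / 25.0) + np.cos(2 * np.pi * y / 20.0)

# The "model" is the same terrain displaced by a known 4-pixel offset in x.
model = shift_grid(truth, dx=4, dy=0)

# RMS computed in place (offset ignored) versus after undoing the offset.
rms_in_place = rms_difference(truth, model)
rms_aligned = rms_difference(truth, shift_grid(model, dx=-4, dy=0))

print(f"RMS without alignment: {rms_in_place:.3f}")
print(f"RMS after alignment:   {rms_aligned:.3f}")
```

In a real comparison the offset is not known in advance, which is why a good initial guess for the translation matters before any local optimization of RMS.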

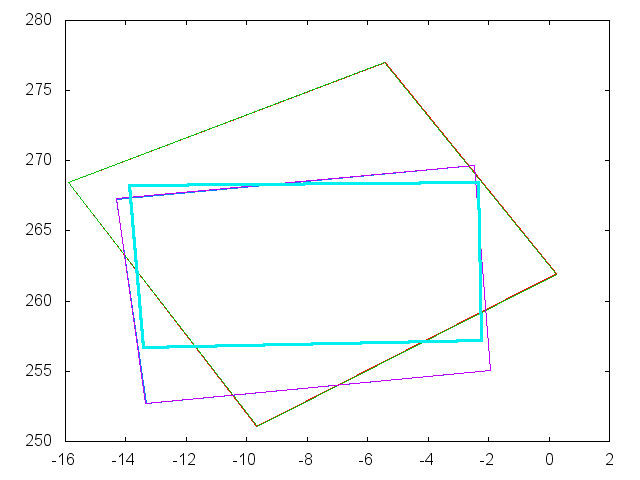

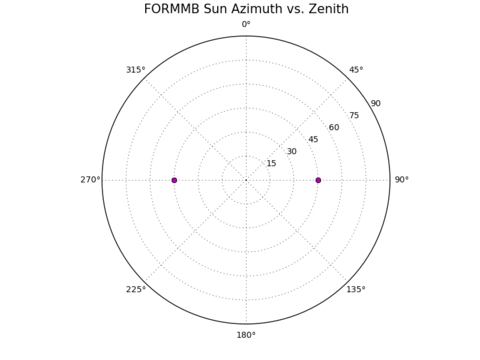

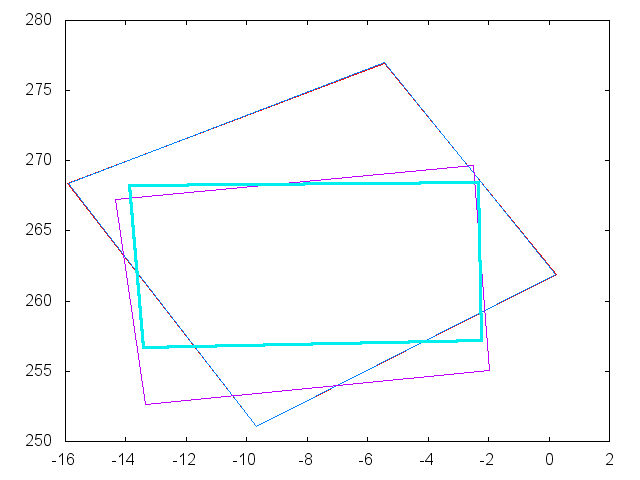

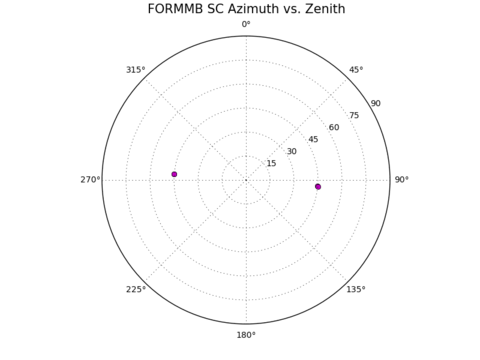

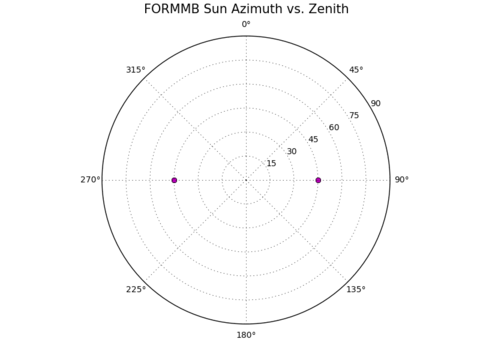

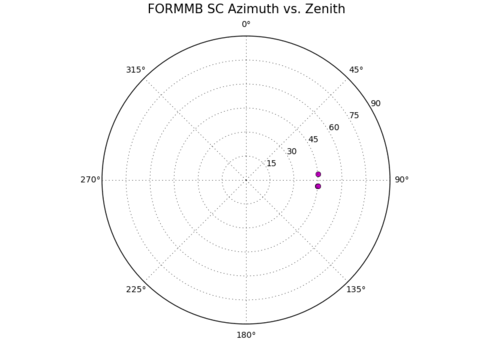

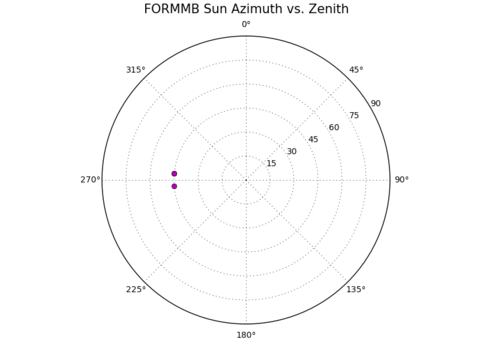

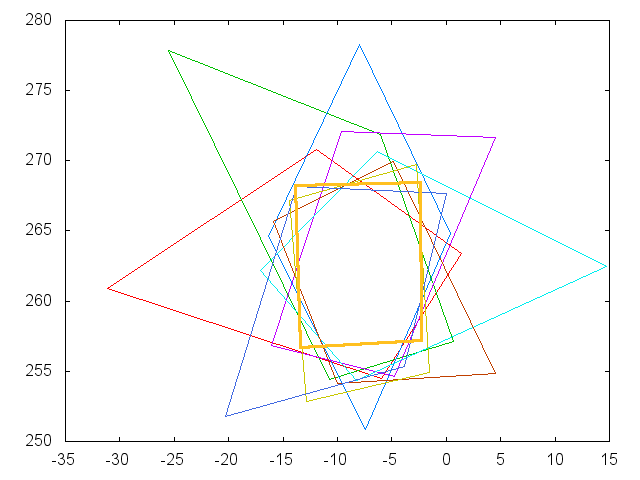

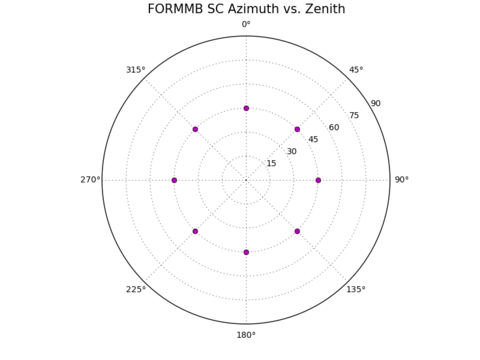

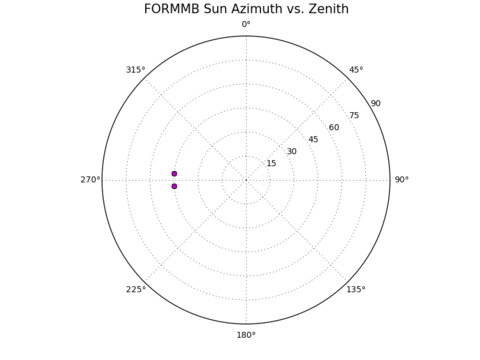

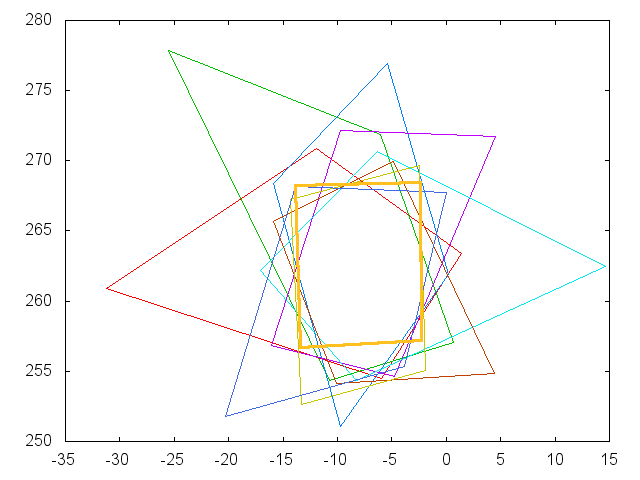

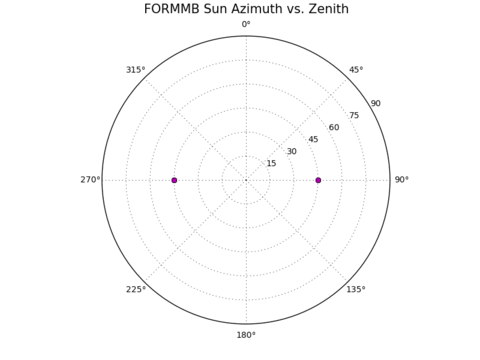

Image Footprints

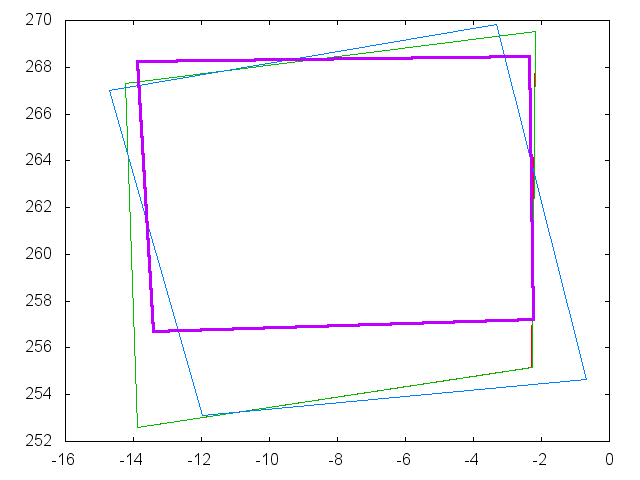

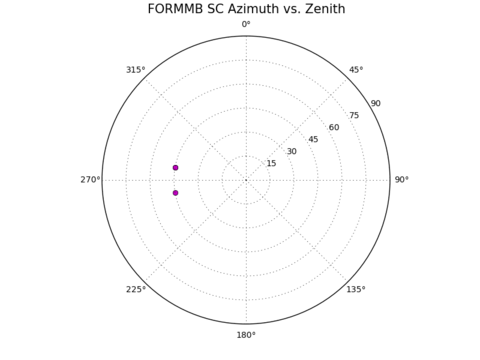

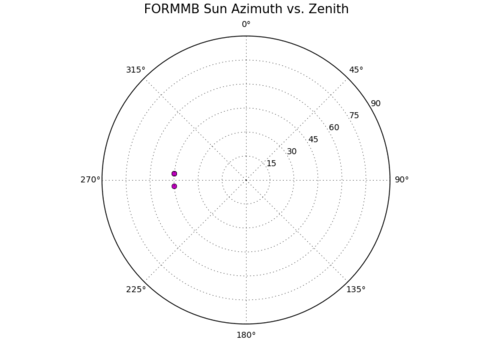

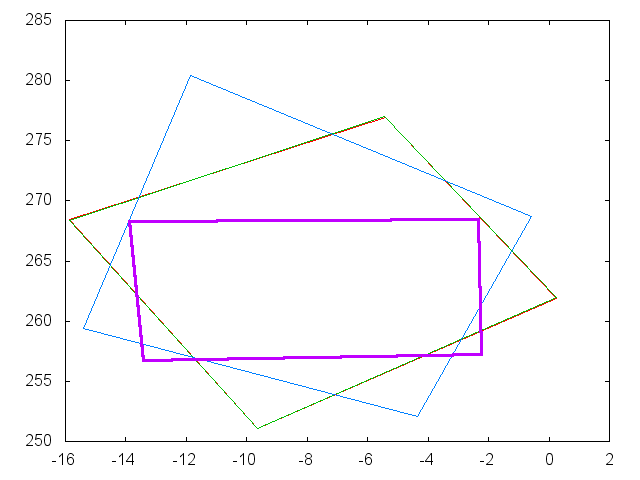

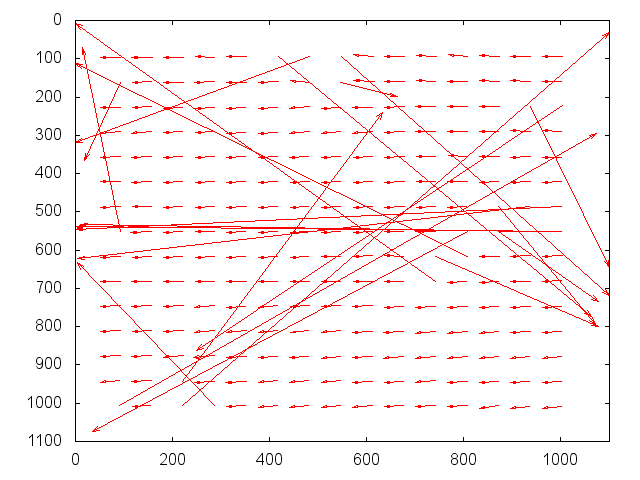

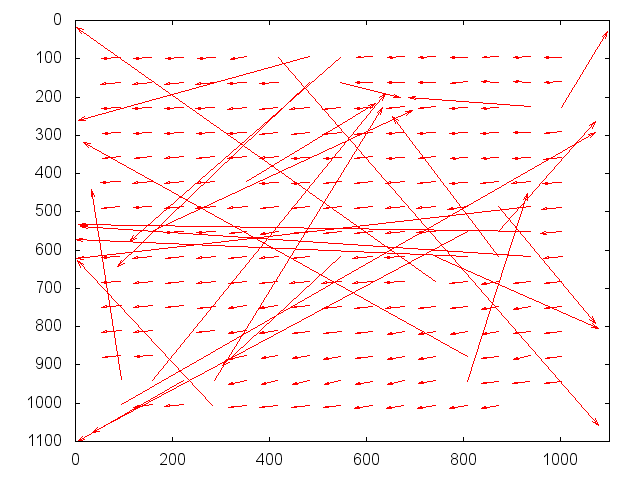

These figures show the image footprints on the surface for each subtest. The bold lines outline the 50x50 m study region. Some images overlap almost exactly, so the total number of images is listed for each figure.

| Sub-Test | Images |
|---|---|
| F3C1 | 4 |
| F3C2 | 4 |
| F3C3 | 3 |
| F3C4 | 3 |
| F3C5 | 8 |
| F3C6 | 8 |

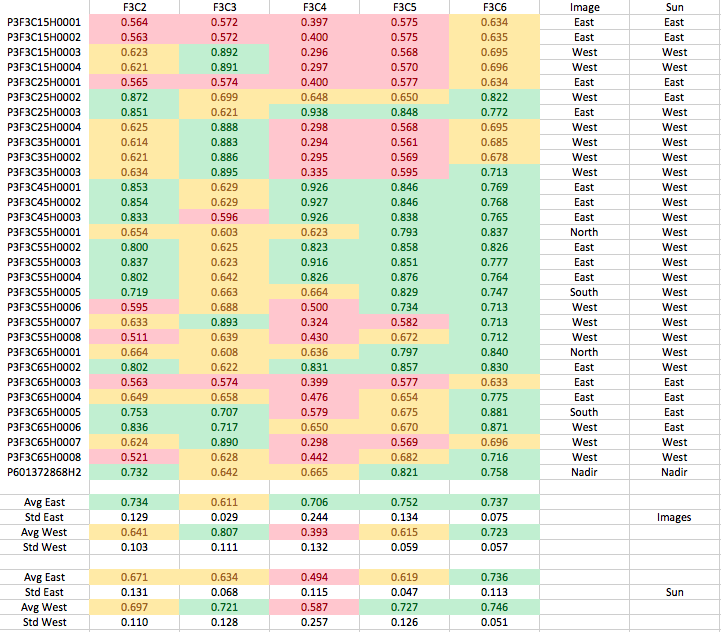

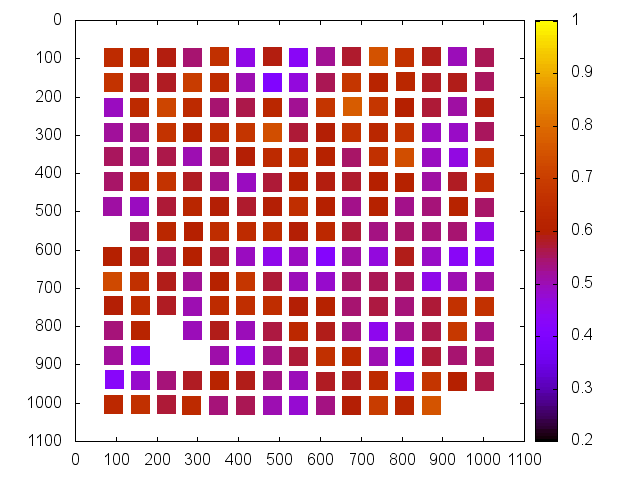

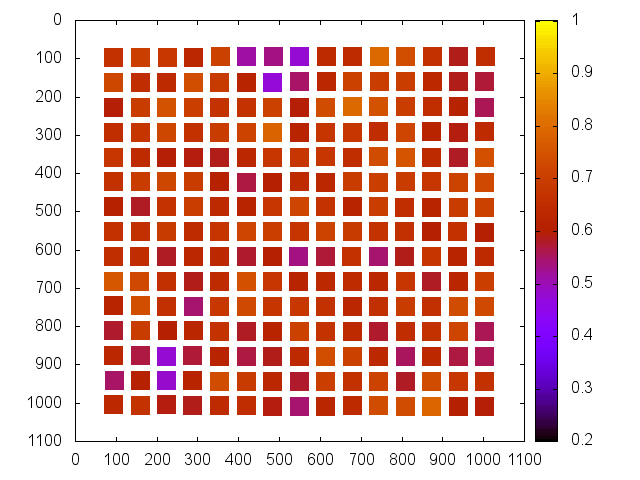

Normalized Cross Correlation

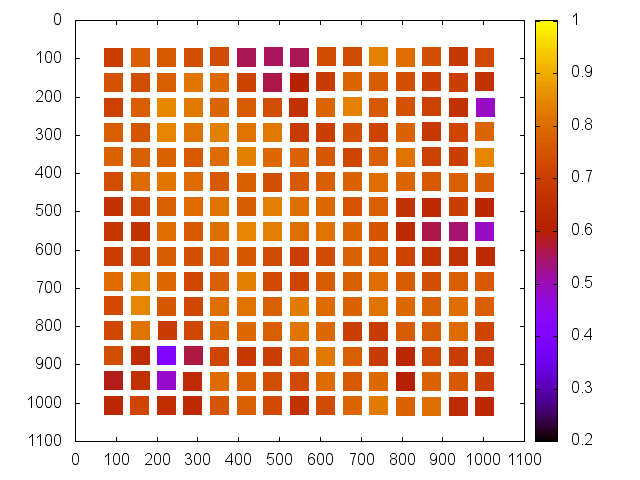

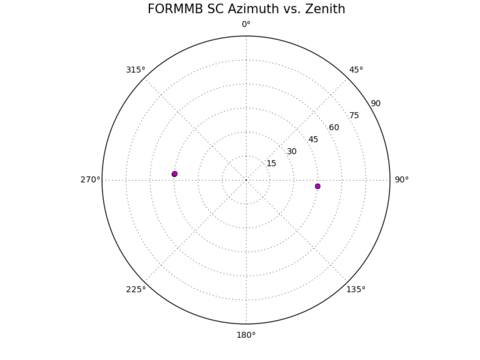

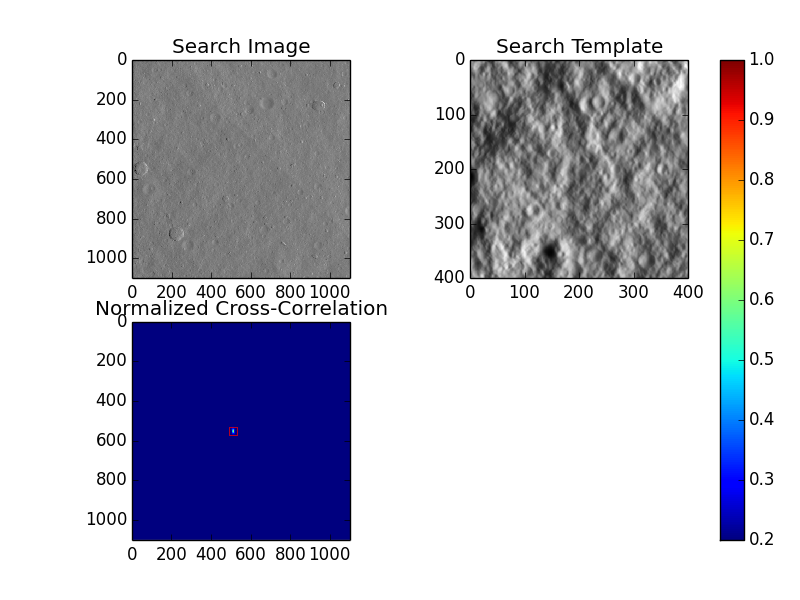

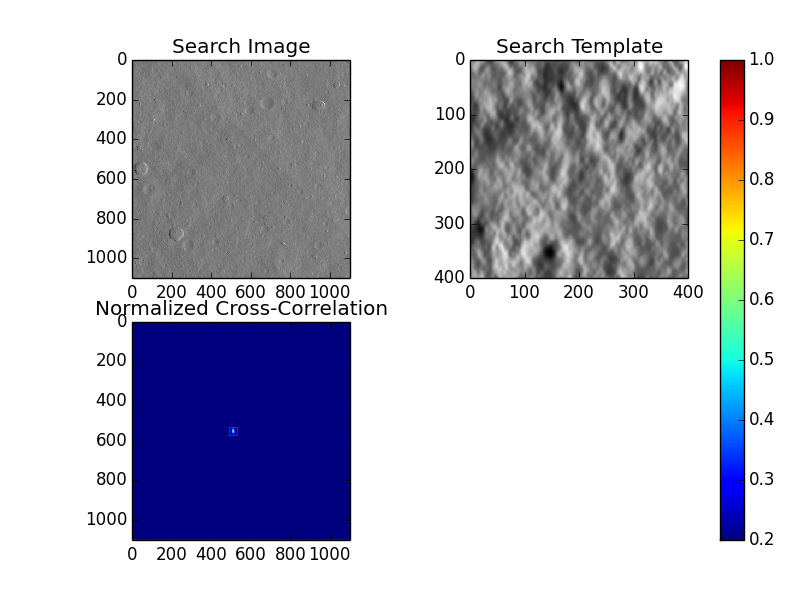

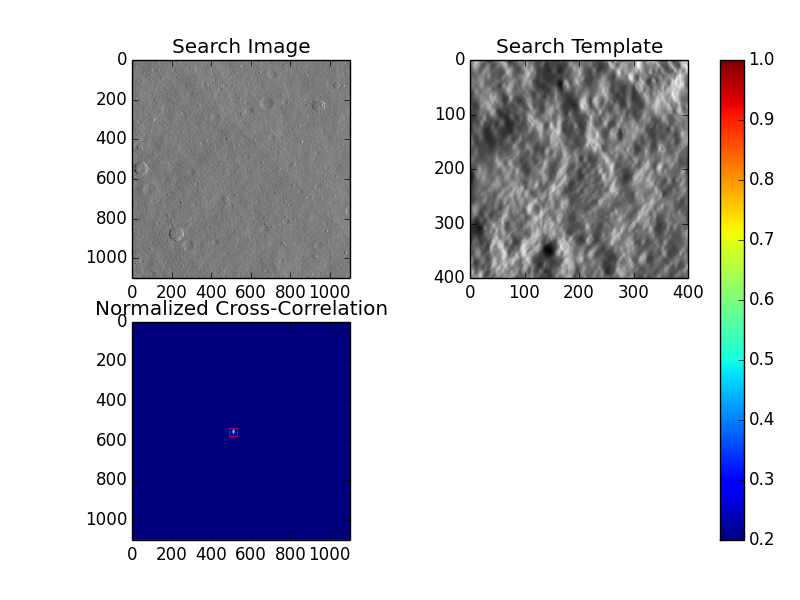

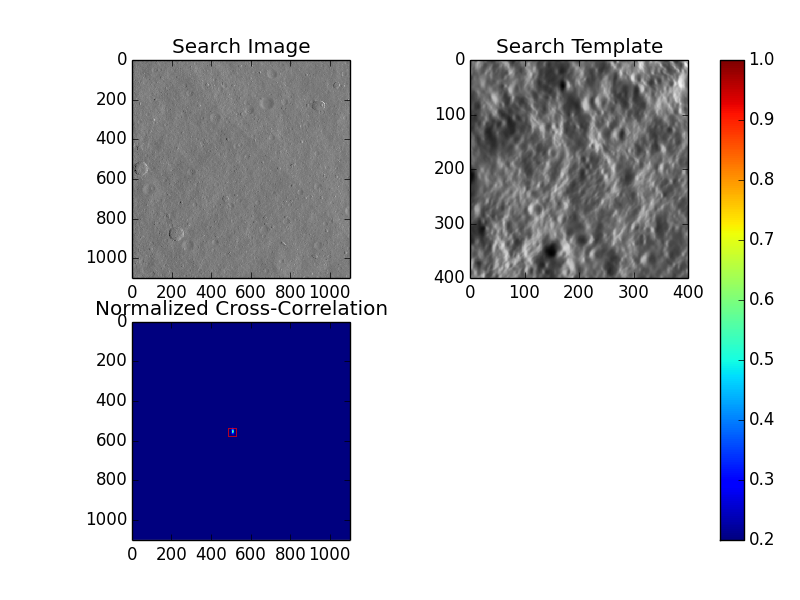

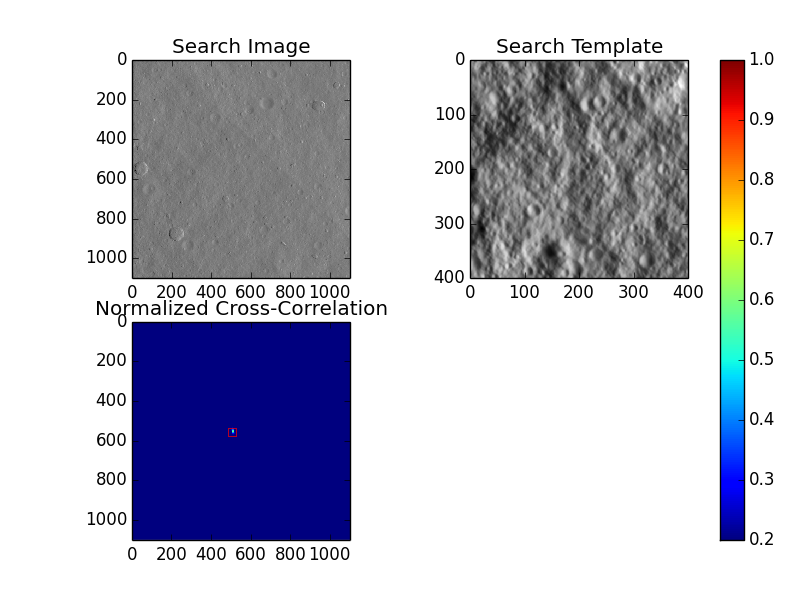

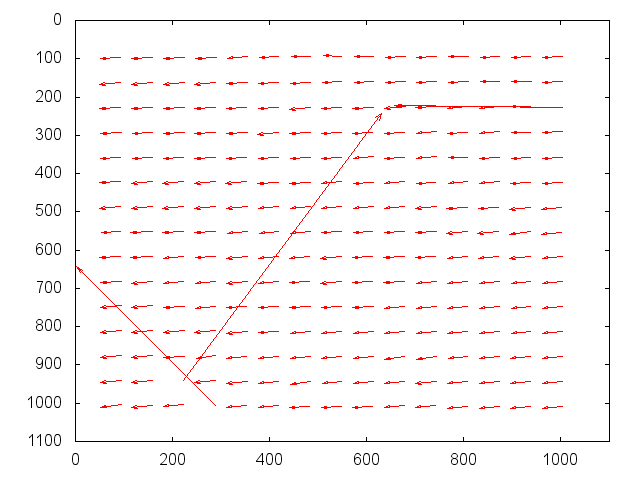

A normalized cross correlation (NCC) analysis was performed in three ways: on the evaluation bigmap (20x20 m) as a whole, on the individual 5 cm/pixel maplets that form the 50x50 m study region, and on the test images compared to images rendered from the model using Imager_MG. The outputs of the NCC analysis on the full bigmaps are pictured below with their respective correlation values. The figure in the upper right-hand corner of each plot makes it easy to see that the topography is drastically affected by the imaging conditions: features on the surface move about, and the bigmap clarity is worse for poorer viewing conditions.
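For readers unfamiliar with NCC, the following is a minimal sketch of the idea, assuming the zero-mean form of the correlation and a brute-force search over whole-pixel translations. It is not the tool used to produce the results here; the function names and the synthetic data are hypothetical. It returns both a correlation score and the translation at which that score was found, which are the two quantities reported in this section.

```python
import numpy as np

def normalized_cross_correlation(a, b):
    """Zero-mean NCC of two equally sized arrays; the result lies in [-1, 1]."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt(np.sum(a ** 2) * np.sum(b ** 2))
    if denom == 0.0:
        raise ValueError("NCC is undefined for a constant array")
    return float(np.sum(a * b) / denom)

def best_translation(truth, patch):
    """Slide `patch` over `truth`; return (score, row, col) of the best match."""
    truth = np.asarray(truth, dtype=float)
    patch = np.asarray(patch, dtype=float)
    ph, pw = patch.shape
    best = (-np.inf, 0, 0)
    for r in range(truth.shape[0] - ph + 1):
        for c in range(truth.shape[1] - pw + 1):
            window = truth[r:r + ph, c:c + pw]
            if window.std() == 0.0:
                continue  # flat window: correlation undefined, skip it
            score = normalized_cross_correlation(window, patch)
            if score > best[0]:
                best = (score, r, c)
    return best

# Hypothetical data: a random "truth" height map and a patch cut from it.
# Scaling and offsetting the patch does not change the zero-mean NCC.
rng = np.random.default_rng(0)
truth = rng.normal(size=(40, 40))
patch = truth[12:22, 7:17] * 2.0 + 5.0

score, row, col = best_translation(truth, patch)
print(f"best score {score:.4f} at row {row}, col {col}")
```

Because the score is insensitive to a constant height offset and to overall scale, it responds to the shape of the terrain rather than to its absolute position, which is why it complements RMS.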

Imager_MG Correlations

| Sub-Test | Correlation Score |
|---|---|
| F3C2 | 0.6940 |
| F3C3 | 0.6238 |
| F3C4 | 0.5996 |
| F3C5 | 0.6664 |
| F3C6 | 0.7650 |

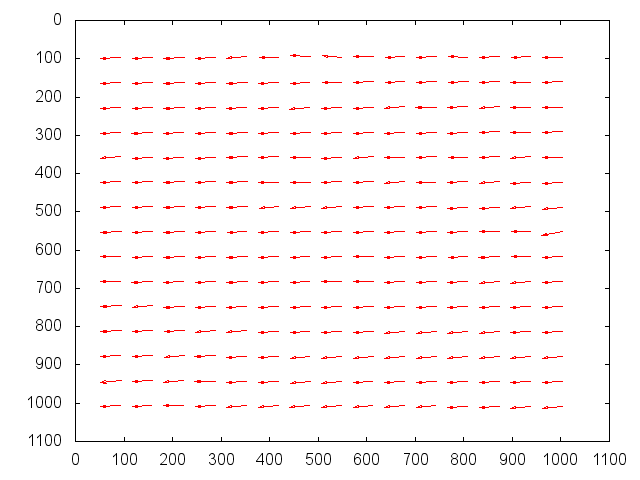

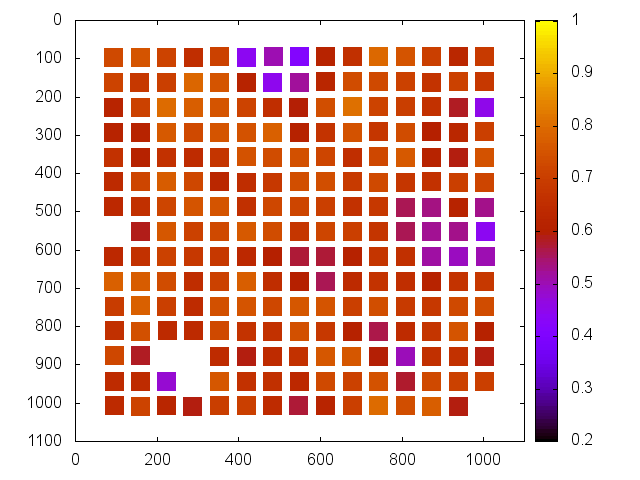

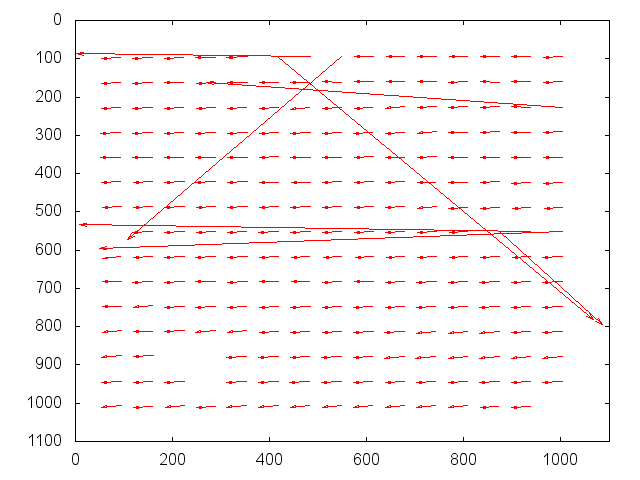

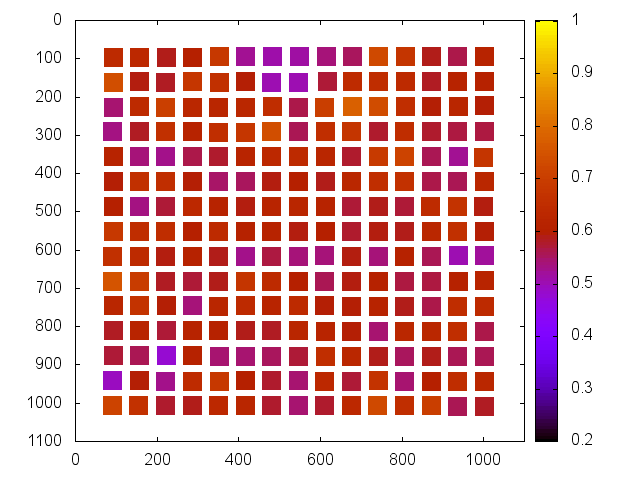

The statistics for each maplet in the 50x50 m study region are given below. The correlation value was plotted for each maplet at its x-y position on the truth bigmap to show where the topography performs better or worse. In addition, the individual translations are plotted to show how far each maplet had to move to reach the location where the correlation was found. The strong agreement in the translations is very encouraging, because we know there is a bias error of approximately 2 m in our model. At the 5 cm/pixel maplet resolution, a 2 m offset corresponds to 40 pixels, which is consistent with the average translations reported. From these translations we are able to derive exclusion criteria for maplets that correlated in an incorrect location. We set a fixed 100 pixel translation as the failure criterion and a 5 pixel deviation from the average as the marginal boundary. This is useful because a maplet may have a high correlation, but if it is in the wrong place, that correlation is meaningless. The statistics for each subtest are outlined in the table below; all values were calculated after the outliers (maplets that moved more than 100 pixels) were removed from the set.

| Sub-Test | Average Translation (px) | Standard Deviation (px) | Pass/Marg/Fail (%) |
|---|---|---|---|
| F3C2 | 39.658 | 0.903 | 96.82/0.00/3.18 |
| F3C3 | 40.099 | 0.906 | 88.00/2.22/9.78 |
| F3C4 | 37.627 | 1.902 | 82.19/5.02/12.79 |
| F3C5 | 41.820 | 1.074 | 97.78/0.89/1.33 |
| F3C6 | 41.179 | 0.600 | 99.11/0.89/0.00 |
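The exclusion criteria described above can be sketched as follows. This is one reading of the text rather than the analysis script itself: it assumes each maplet is summarized by a single translation magnitude in pixels, that the average and standard deviation are taken over the maplets that did not fail, and that the standard deviation is the population form. The function name and the example values are hypothetical.

```python
import numpy as np

FAIL_TRANSLATION_PX = 100.0    # from the text: larger translations are failures
MARGINAL_DEVIATION_PX = 5.0    # from the text: allowed deviation from the average

def classify_maplets(translations_px):
    """Return translation statistics and pass/marginal/fail percentages."""
    t = np.asarray(translations_px, dtype=float)
    failed = t > FAIL_TRANSLATION_PX
    kept = t[~failed]                      # statistics exclude the outliers
    if kept.size == 0:
        raise ValueError("every maplet exceeded the fail threshold")
    mean = float(kept.mean())
    std = float(kept.std())                # population std (an assumption)
    marginal = ~failed & (np.abs(t - mean) > MARGINAL_DEVIATION_PX)
    passed = ~failed & ~marginal

    def percent(mask):
        return 100.0 * int(mask.sum()) / t.size

    return {
        "mean_px": mean,
        "std_px": std,
        "pass_pct": percent(passed),
        "marginal_pct": percent(marginal),
        "fail_pct": percent(failed),
    }

# Hypothetical translations: most near 40 px, one marginal, one clear outlier.
example = [40, 41, 39, 40, 40, 41, 39, 40, 48, 250]
stats = classify_maplets(example)
print(stats)
```

Computing the average only from the retained maplets keeps a single badly placed maplet from dragging the reference translation away from the true offset.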